Enable Record Aggregation in the Kinesis Firehose Agent

Enable record aggregation in the AWS Kinesis Firehose Agent daemon and potentially halve your Firehose costs.

The Firehose Agent is a daemon for writing data to a Firehose Delivery Stream.

It reads data directly from a file on disk, batches up the records, and sends them to Firehose.

This means your application doesn't have to worry about interacting with the Firehose API and can just pipe data directly into a normal file.

The agent can be configured to buffer data in specific ways.

These options have sensible defaults, except for aggregatedRecordSizeBytes.

This option defaults to 0, meaning no aggregation occurs at all.

Set this to 750000 and save money on your Firehose costs:

    {
      "flows": [
        {
          "filePattern": "/data/stream-file.json",
          "deliveryStream": "stream-name",
          "aggregatedRecordSizeBytes": 750000
        }
      ]
    }

A client I worked for had several Firehose streams that handled 130 TB per month. This should have cost about $3,770/month, but since aggregation wasn't enabled, they were being charged over $10,000/month. That's a potential saving of 62%!
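
The arithmetic behind those figures can be checked with a quick sketch. It assumes 1 TB = 1,000 GB and a flat $0.029/GB ingestion rate (the rate used later in this post); the function name is made up for illustration, and real Firehose pricing varies by region and volume tier.

```python
PRICE_PER_GB = 0.029  # assumption: flat rate, taken from the figure used in this post


def monthly_cost(gigabytes: float, price_per_gb: float = PRICE_PER_GB) -> float:
    """Ingestion cost in dollars for the given volume (hypothetical helper)."""
    return gigabytes * price_per_gb


ideal = monthly_cost(130 * 1_000)  # 130 TB expressed in GB
actual = 10_000                    # what the client was roughly being charged
saving = 1 - ideal / actual

print(f"ideal: ${ideal:,.0f}/month, saving: {saving:.0%}")
```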

Next, we'll go over how to determine whether you can save money, followed by an explanation of Firehose billing and why aggregation works.

CloudWatch Metrics

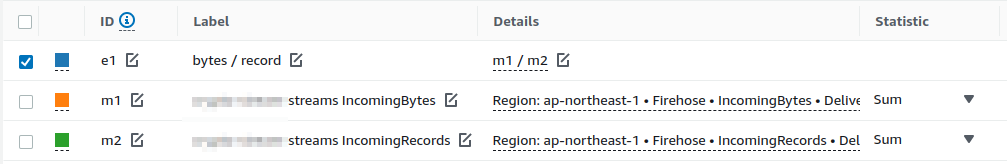

To see if this is a problem for your streams, create a computed metric in CloudWatch with IncomingBytes / IncomingRecords.

This will show you the average number of bytes per record, and you can get an idea of how bad it might be.

To get a more detailed view, open up the Cost Explorer and filter by "Kinesis Firehose" as the service and "BilledBytes" as the Usage Type.

This will show you exactly how many GB you're being charged for.

Compare this with the sum of the IncomingBytes metric for the same period and you can see the exact extra charge.

For instance, if BilledBytes is 10,000 GB and the IncomingBytes sum is 7,000 GB, that's an extra charge of 3,000 GB.

At $0.029/GB, that's $87 more than you should be paying.
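
The same comparison can be written as a small calculation. This is only a sketch: the function names are hypothetical, the inputs are numbers you would read off CloudWatch and Cost Explorer yourself, and the $0.029/GB rate is the one assumed in this post.

```python
def average_record_size(incoming_bytes: float, incoming_records: float) -> float:
    """Average bytes per record, i.e. IncomingBytes / IncomingRecords."""
    return incoming_bytes / incoming_records


def extra_charge(billed_gb: float, incoming_gb: float, price_per_gb: float = 0.029):
    """Return (extra GB billed, extra dollars paid) for one billing period."""
    extra_gb = billed_gb - incoming_gb
    return extra_gb, round(extra_gb * price_per_gb, 2)


# The example from the text: 10,000 GB billed against 7,000 GB actually sent.
extra_gb, extra_dollars = extra_charge(10_000, 7_000)
```

If the average record size comes out well below 5 KB, the gap between the two numbers is mostly rounding.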

How Firehose billing works

When a record is sent to Firehose, it's rounded up to the nearest multiple of 5 KB and this is the amount used for billing. For instance, a 500 byte record will be billed the same as a 4.5 KB record: both at 5 KB. This means if you do not fill up your records to this limit, you will be paying a premium for the service. For small records at 500 bytes each, you pay 10x the cost if you do not aggregate.
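
To make the rounding concrete, here is a minimal sketch of the billing rule as described above. It assumes "5 KB" means 5,000 bytes, which matches the arithmetic in this post; check the pricing page for the exact increment. The function names are made up for illustration.

```python
INCREMENT_BYTES = 5_000  # assumption: 5 KB treated as 5,000 bytes


def billed_bytes(record_size: int, increment: int = INCREMENT_BYTES) -> int:
    """Round a record size up to the next multiple of the billing increment."""
    if record_size <= 0:
        raise ValueError("record_size must be positive")
    # Integer ceiling division, then scale back up to bytes.
    return -(-record_size // increment) * increment


def cost_multiplier(record_size: int, increment: int = INCREMENT_BYTES) -> float:
    """How many times more you pay compared with the raw record size."""
    return billed_bytes(record_size, increment) / record_size


print(billed_bytes(500), billed_bytes(4_500))  # both bill as 5000
print(cost_multiplier(500))                    # 10.0
```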

More details on the Amazon Kinesis Data Firehose pricing page.

It's worth noting that regular Kinesis Data Streams work the same way, just in 1 KB increments.

How aggregation works

Record aggregation is simple in practice. Suppose you have five records you want to send to Firehose and you've written them to the file for the agent to consume:

    {"id":"record1"}
    {"id":"record2"}
    {"id":"record3"}
    {"id":"record4"}
    {"id":"record5"}

Without aggregation, these are sent individually, one record per line. The agent will send these in a single PutRecordBatch request, but billing occurs at the individual record level. Thus, these five records will be billed at 25 KB! This is an extreme example, but it's easy to imagine how this can blow up without warning.

With record aggregation enabled, the agent will send all five lines in a single record, including newlines:

{"id":"record1"}\n{"id":"record2"}\n{"id":"record3"}\n{"id":"record4"}\n{"id":"record5"}\n

This will still be rounded up to the nearest 5 KB, but the idea is that record aggregation would include hundreds of records at a time. When the data finally arrives in S3, it's written as-is and will appear exactly as it was originally written.
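
The effect on billing can be simulated with a short sketch. This is not the agent's actual implementation, just an illustration of the idea: lines are joined with newlines into records up to a size limit, and each record is then rounded up to a 5 KB increment (assumed here to be 5,000 bytes). Both function names are hypothetical.

```python
def billed_bytes(size: int, increment: int = 5_000) -> int:
    """Round a record size up to the billing increment (assumed 5,000 bytes)."""
    return -(-size // increment) * increment


def aggregate(lines, max_record_bytes: int = 750_000):
    """Pack newline-terminated lines into records no larger than the limit."""
    records, current = [], b""
    for line in lines:
        data = line.encode("utf-8") + b"\n"
        # Start a new record if adding this line would exceed the limit.
        if current and len(current) + len(data) > max_record_bytes:
            records.append(current)
            current = b""
        current += data
    if current:
        records.append(current)
    return records


lines = [f'{{"id":"record{i}"}}' for i in range(1, 6)]

# One record per line versus one aggregated record.
unaggregated = sum(billed_bytes(len(line.encode("utf-8"))) for line in lines)
aggregated = sum(billed_bytes(len(record)) for record in aggregate(lines))

print(unaggregated, aggregated)  # 25000 5000
```

With only five tiny lines the aggregated record still rounds up to 5 KB, but the padding is paid once instead of five times.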

Why 750 KB?

The maximum record size for Firehose is roughly 1 MB (the documented limit is 1,000 KiB before base64 encoding).

I chose a configuration for aggregatedRecordSizeBytes at 750000 somewhat arbitrarily.

I like to include a buffer, just in case there's an issue with the agent and it's not able to aggregate fully.

I doubt this is the case and you're probably fine setting it to 1000000, but I'd rather be safe than sorry.

The maximum extra charge will always be 5 KB less 1 byte. For instance, if a record is sent at 745,001 bytes, the extra charge will be 4,999 bytes, which is only 0.7% over what the price should be. I think this is acceptable.
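
As a rough check of that percentage, the sketch below computes the relative overhead for a given record size, again assuming a 5,000-byte billing increment. The function name is made up for illustration.

```python
def rounding_overhead(record_size: int, increment: int = 5_000) -> float:
    """Fraction of extra bytes billed relative to the bytes actually sent."""
    billed = -(-record_size // increment) * increment  # round up to increment
    return (billed - record_size) / record_size


large = rounding_overhead(745_001)  # worst case near the 750,000 setting
small = rounding_overhead(500)      # a single unaggregated 500-byte record

print(f"{large:.2%} vs {small:.0%}")
```

The worst case for a large aggregated record stays under 1%, while a lone 500-byte record carries 900% overhead.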